My talk about rolling out the Gorgias AI Agent at SaaStr Annual 2024 in San Francisco. I’m sharing a bit of what happens under the hood and, specifically, the lessons learned during the release.

Scaling engineering at Gorgias

A talk about how we scaled our engineering team at Gorgias, cognitive load theory for organizing teams, when to split a team, what to look for in great engineering managers and a few other topics.

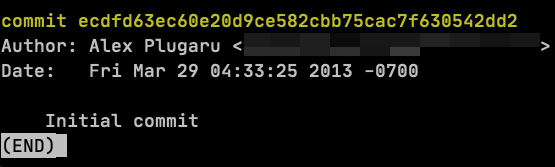

Big things have small beginnings

Ten years ago, on a Friday night, in a small studio at the edge of Paris, I wrote the first lines of code that started the Gorgias adventure.

Why was I coding a customer service solution? I volunteered to help with customer service at Gymglish to understand our customers and support process better. I still believe that doing customer service makes you at least a better engineer. Everyone should do it. Antoine and Ben (our first investors) encouraged me to improve things, and I had tried a few helpdesks, but they were expensive and complicated for Gymglish.

Enter Gorgias. The idea was simple: Write faster on the internet with templates.

Ionut helped me with initial iterations. Soon after, I met with our now-CEO, Romain, and the small project became a bigger idea, then a startup with customers, and eventually, a fast-growing company.

In late 2015, we got accepted to the Numa accelerator in Paris, then joined Techstars NYC, where Alex Iskold was our sponsor and investor.

Soon after, we raised our seed round from CRV, with Murat Bicer leading the round. Next, we moved to San Francisco, where we managed to find product-market fit over the next 18 months with Louis, Martin, and Jean-Elie.

Once we got to around $10,000 MRR, Aasif joined, and Jason Lemkin from SaaStr provided additional funding when we most needed it. Jason has guided us since then, investing in all the following rounds!

Today, we are proud to serve around 12,000 ecommerce merchants, improving the customer experience for hundreds of millions of shoppers. I’m extremely fortunate to work side-by-side with 250+ talented and hard-working Gorgians to accomplish these amazing things.

Looking back, it’s clear that this was a series of winning lottery tickets that cannot be replicated. Gorgias exists because many people (including many I didn’t mention) gave Romain and me a chance.

This is how startups are made. Day by day, year by year, with lots of luck, leaps of faith, and hard work from many people.

I’m writing this for those who took the first step and entered the entrepreneurial arena. I tip my hat to you, my brothers and sisters! Expect the road to be long and arduous, but nothing worth doing is ever easy.

All big things have small beginnings

…and we’re just getting started.

Gorgias's history, mission and what's next

Big thanks to Ramon Berrios & Blaine Bolus for inviting me to talk about Gorgias’s history, its mission and what is coming next.

Engineering management books

If you’re like me (a first-time manager in a fast-growing software company), you’re likely facing a vast number of organizational issues that you have never faced before, and they are coming at you faster than you can learn how to deal with them.

Thankfully there are smarter, more experienced people out there who have figured out a lot of these topics. You can copy them, claim the credit and become the leader that you always dreamed of being and that your company desperately needs!

My list of books I wish I knew about before scaling Gorgias

If it’s not immediately obvious, the book selection and the order below are opinionated. I’m starting with the leadership and culture books, then going into management fundamentals, engineering manager career orientation, hiring engineers, operational best practices, writing strategy and finally scaling teams and productivity.

First fundamentals, then tactics.

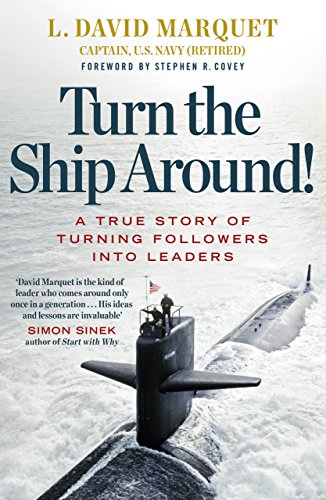

Turn The Ship Around!

My personal take on the whole leader-follower dichotomy is that it's abused in our industry. Being a follower: bad! Being a leader: good!

Great leaders have figured out when to follow and empower the people on their team and when to lead. The art of moving between these modes is what separates noobs from experienced and effective leaders. This is how trust is built and how you encourage growth, ownership and motivation in people.

IMO the best part about this book is that it's **not boring**! If you have read enough management and self-help books you know what I'm talking about.

No Rules Rules

Allow me to put it less diplomatically in steps:

- First you aggressively fire anyone that is not a "top performer".

- Then you pay top of the market salaries to those who are left and recruit the "best" people.

- Remove redundant bureaucracy because you hired the "best" and they hate stupid rules that don't bring any value.

- Make sure that they know they own their shit, they are expected to take risks and will get fired if they don't get results on time.

The book is full of anecdotes of the CEO and interviews from the various employees that make the case for the above.

My recommendation is to take the ideas here with a giant grain of salt. They likely don't apply to your seed-stage B2B SaaS startup where cash is in limited supply and you have commission-based roles.

Why do I recommend this book then?

Because I think it helps you think about how to treat product, engineering and design roles: why it's worth paying top dollar to get the best possible engineers, why your designers should not have to jump through ridiculous hoops to get their best work done, and why your product people should be empowered to take risky but calculated decisions.

I think it applies to marketing and other "scalable" roles, but that's not my place to comment.

The Manager’s Path

Smart and Gets Things Done

Is the candidate smart and can they get things done?

Today I would add cultural fit: it’s important because you cannot change someone’s values or personality. The point the book is making is that you should not hire academics that are smart, but never get anything finished. Nor should you hire people who work a lot, but make dubious decisions and constant mistakes.

This book forced me to answer the same two questions after every interview: Are they smart? Can they get things done?

Not sure? Not a good idea to hire.

Accelerate

Introducing DORA metrics - read more [here](https://www.swarmia.com/blog/dora-metrics/).

- **Deployment frequency**: How often a software team pushes changes to production

- **Change lead time**: The time it takes to get committed code to run in production

- **Change failure rate**: The share of incidents, rollbacks, and failures out of all deployments

- **Time to restore service**: The time it takes to restore service in production after an incident
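To make the four definitions above concrete, here is a minimal sketch of how they could be computed from a list of deployment records. The `Deployment` record, its field names and the `dora_metrics` helper are hypothetical names invented for this illustration; they do not come from the book or from any particular tool. It also assumes that time to restore is measured from the failing deployment to the moment service is restored, which is only one of several reasonable ways to measure it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # caused an incident or rollback
    restored_at: Optional[datetime] = None  # when service was restored


def dora_metrics(deployments: list[Deployment], period_days: int) -> dict:
    """Compute the four DORA metrics over a period of `period_days` days."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")

    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    # Assumption: restore time runs from the failing deployment to recovery.
    restore_times = [
        d.restored_at - d.deployed_at
        for d in failures
        if d.restored_at is not None
    ]

    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "median_change_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Made-up example data: four deployments over two days, one of which failed.
example = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 10)),
    Deployment(
        datetime(2024, 1, 1, 12),
        datetime(2024, 1, 1, 15),
        failed=True,
        restored_at=datetime(2024, 1, 1, 15, 30),
    ),
    Deployment(datetime(2024, 1, 2, 9), datetime(2024, 1, 2, 11)),
    Deployment(datetime(2024, 1, 2, 10), datetime(2024, 1, 2, 14)),
]

metrics = dora_metrics(example, period_days=2)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

The sketch uses medians rather than means so that a single unusually slow change or long outage does not dominate the result. That is a design choice for this example, not something the metric definitions require.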

Should you use these metrics to draw immediate conclusions about whether your team is amazing or sucks? Metrics without context are a huge danger, but they can trigger valuable investigations and lead to more understanding.

I recommend supplementing this book with [Swarmia](https://www.swarmia.com/). I have no affiliation with them. Just a fan.

Team Topologies

From Jacob Kaplan-Moss's [blog](https://jacobian.org/2021/jul/5/book-review-team-topologies/), which goes into more detail:

The main thesis of the book is to engage in “team-first thinking”:

We consider the team to be the smallest entity of delivery within the organization. Therefore, an organization should never assign work to individuals; only to teams. In all aspects of software design, delivery, and operation, we start with the team.

It covers four common patterns for teams:

- Stream-aligned teams, that are aligned to a single delivery stream, such as a product or service (what others might call a “product team” or a “feature team”).

- Enabling teams, specialists in a particular domain that guide stream-aligned teams

- Complicated-subsystem teams that maintain a particularly complex subsystem, such as an ML model

- Platform teams that provide internal services like deployment platforms or data services

An Elegant Puzzle

Then it gets into processes and various rituals that are common in scaling orgs.

Finally, it gets into some work principles and culture, ending with hiring and career growth.

I couldn't pinpoint a single thing about why I like this book. It addresses a lot of issues that I'm having right now at Gorgias.

That’s it for now! In the future I will update the list above by adding or removing books. I’ll try to keep it to fewer than 10.

Word of advice:

Supplement your book reading by meeting leaders in your space, getting an executive coach, listening carefully to your team and customers and mentoring people. There are many ways to learn; books, podcasts and blog posts are just one of them. Arguably not the best one.